The unsaid voice of machines

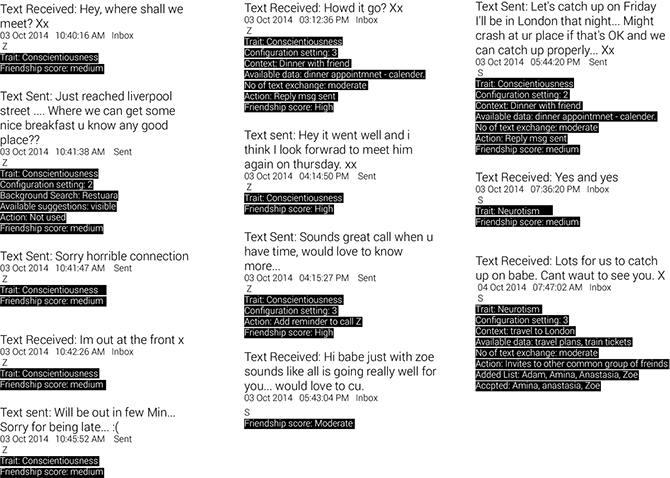

A critical design experiment to start a dialogue with the black box. The black box acts as a metaphor for the unsaid voice box of machine learning algorithms and models, which constantly run and update in the background. It tells us how much work these systems are performing on our behalf.

The printed text showcases the processing behind every decision a machine goes through to arrive at each correct suggestion. Instead of hiding all the work and giving one simple answer or suggestion, the printer prints out every action the machine is performing. Its background noise acts as a subtle reminder of the amount of work machines do on our behalf. Through continuous association with the sound of the printer, you start to build a relationship with these automated intelligent systems.

Humanizing Machines

The project is therefore an attempt to make the process transparent, as well as a way to understand the amount of work machines do behind the scenes. It is also an experiment to find out whether such a system would help build tolerance towards, and a relationship with, these machines and their decision-making processes.

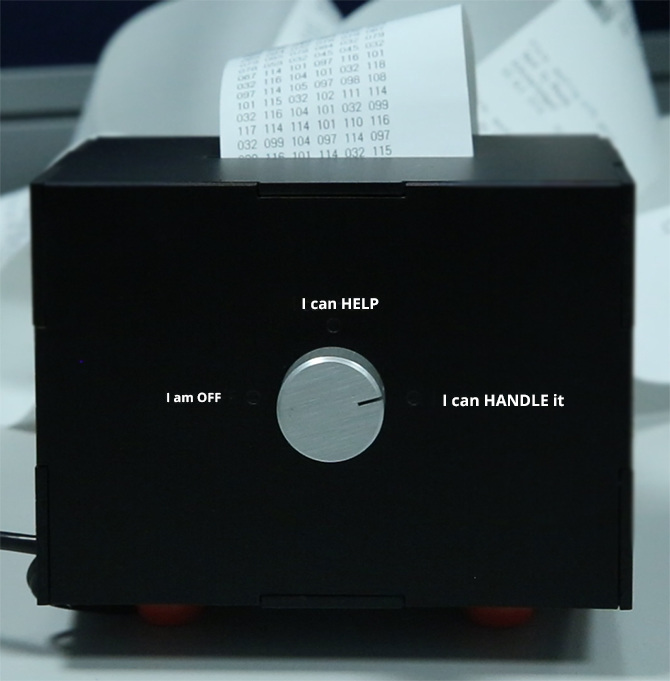

Controls

The controls allow users to configure how much control the machines have when they handle our work. This opens up questions about our comfort and trust levels towards such systems: to what extent are we comfortable letting these machines handle work on our behalf?

Presently, these systems operate at a level where they offer us useful suggestions and information relevant to our queries. But with time, knowledge and training, can these systems gain enough trust and intelligence that we might allow them to perform certain actions on our behalf, without our permission?

I am OFF - A mode in which the machine is switched off and not working.

I can HELP - The typical mode today, in which machines help us with information and valuable suggestions.

Controls and configuration are an interesting aspect to reflect on, since they raise many questions about machine learning models and similar systems:

- When will we reach a level of trust and comfort that lets us allow machines to perform work on our behalf?

- How much engagement and training is needed to reach the confidence and trust required to let machines work on our behalf?

- What kinds of tasks are we comfortable allowing machines to do on our behalf?

Feedback

The conventional way of providing feedback to machines is simply pressing yes/no or like/dislike. But such a binary mode of communication is limiting, since it leaves no possibility of opening a dialogue with the machines.

This is a figurative representation of the concept of giving feedback to a machine. The slider replaces the binary choice and lets users indicate to what extent they find the information or output from the black box useful.

Ideally, it is also important for users to see why the machines have made certain decisions, and the logic behind them, so that they can alter these decisions. Making each other's processes more transparent creates a dialogue and builds tolerance towards the machines.

Lab Collaboration: Human Experience and Design, Microsoft Research, Cambridge

Supervised by David Sweeney.

Detailed information available here